How Coordinated Misinformation Is Reshaping Public Opinion Across Bharat

Fact-check reports from January 2026 reveal how false claims, edited visuals, and identity-driven narratives are shaping public opinion faster than corrections can respond.

January 2026 offered yet another reminder of how easily facts can be bent, blurred, or entirely replaced in today’s digital age. A close reading of the domestic fact-check report for the month shows that misinformation is no longer an occasional slip or misunderstanding. It has become a steady undercurrent in public life, shaping opinions long before the truth has a chance to catch up.

Throughout the month, dozens of misleading claims travelled rapidly across social media, often reaching thousands of users within hours. Platforms such as X, Facebook, and Instagram functioned less as spaces for dialogue and more as accelerators of unverified information. By the time clarifications emerged, many narratives had already taken root in public consciousness, making correction significantly more difficult.

Politics remained at the centre of much of this misinformation. Claims related to government schemes, welfare programmes, and administrative decisions circulated widely, often relying on selective facts or misleading conclusions. In several cases, routine policy details were presented as dramatic rollbacks or exclusions. Although these claims were later disproved, they succeeded in generating confusion and distrust, particularly among communities directly affected by such schemes.

Fact Check: Mohan Bhagwat did not make any statement about removing 50% of Dalit and OBC personnel from the army; a fake video is going viral | Dfrac

— DFRAC (@DFRAC_org) January 8, 2026

#mohanbhagwat #rss pic.twitter.com/y5DhQyaH1m

Visual misinformation proved especially potent. Edited photographs and cropped video clips involving political leaders spread widely, frequently stripped of their original context. Ordinary moments were reframed as evidence of conflict or misconduct. For many viewers, seeing amounted to believing, and the emotional impact of such visuals often outweighed factual explanations that followed.

More troubling was the deliberate use of religious and caste identities to lend emotional weight to false narratives. Claims of targeted action, humiliation, or discrimination circulated without verification, tapping into deep-seated fears and social sensitivities. Such content intensified existing divisions and made rational engagement more difficult.

Politics and Governance: False Claims That Hit Headlines

Several prominent examples illustrated how misinformation distorted public understanding of governance during the month:

A video shared on social media claims that the Mahatma Gandhi National Rural Employment Guarantee Act (MGNREGA) earlier guaranteed a statutory minimum wage, but that wages will now be fixed arbitrarily under (VBGRAMG). #PIBFactCheck

— PIB Fact Check (@PIBFactCheck) January 18, 2026

❌ This claim is misleading.

✅ Employment… pic.twitter.com/vr4R8xfgSD

MGNREGA Misrepresentation: A viral post falsely claimed that the legal guarantee for minimum wages under MGNREGA had been removed, creating anxiety among beneficiaries.

Citizenship Allegations Against Mohammed Shami: A politically charged post alleged that the cricketer had been asked to prove his nationality, a claim that lacked any factual basis.

Ladli Laxmi Yojana Misstatement: A senior leader’s social media post asserted that an 80 percent marks criterion was mandatory to receive benefits under the scheme. Fact-checkers later found that the claim misrepresented the actual government policy.

Communalism and Social Faultlines

Misinformation exploiting communal and caste identities featured prominently in January, often carrying the potential to inflame tensions:

Bareilly Namaz Narrative: A claim that police had arrested 12 Muslim men for offering prayers in a private residence circulated widely. Authorities later clarified that the individuals were detained for administrative reasons, not as an act of religious oppression.

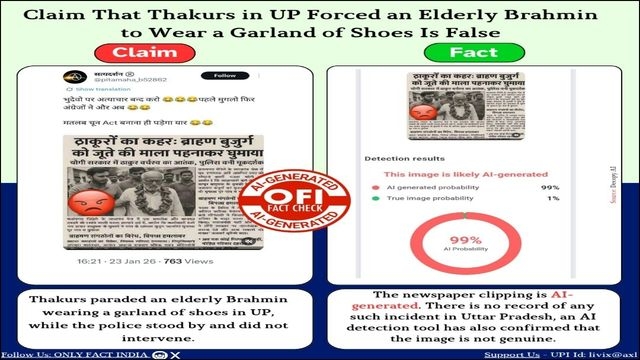

Caste Conflict Fabrications: A widely shared post alleging that a Brahmin man in Uttar Pradesh was forced to wear a garland of shoes was exposed as an AI-generated fabrication.

Caste Angle in a Murder Case: Attempts to portray a murder case as caste-based were debunked after investigations revealed no evidence of caste motivation.

Fact-checks eventually demonstrated that many of these stories were exaggerated or entirely fabricated. However, even their brief circulation online proved sufficient to inflame emotions and deepen suspicion between communities.

January also marked a noticeable rise in AI-generated misinformation. Digitally altered images, synthetic voices, and fabricated visuals increasingly appeared in false stories, lending them an air of authenticity. These creations were often polished and convincing, making it more challenging for ordinary users to distinguish between reality and fabrication. As artificial intelligence tools become more accessible, the boundary between truth and falsehood continues to blur.

The spread of misinformation did not remain confined to political actors alone. Influencers, viral pages, and pseudo-news accounts played a significant role in amplifying false claims, often driven by the pursuit of engagement rather than ideology. In doing so, they contributed to an online environment where sensationalism frequently outweighed responsibility.

Fact-checking organisations and official verification units worked consistently throughout the month to counter these narratives. Their interventions prevented several false claims from escalating further. Yet the report underscores a fundamental limitation: fact-checking is inherently reactive. It responds to damage after it has already begun, rather than preventing it at the source.

The patterns observed in January 2026 underline a broader challenge facing the digital public sphere. As misinformation grows more coordinated and technologically sophisticated, the task of safeguarding informed public discourse becomes increasingly complex.

Written by

Kewali Kabir Jain

Journalism Student, Makhanlal Chaturvedi National University of Journalism and Communication